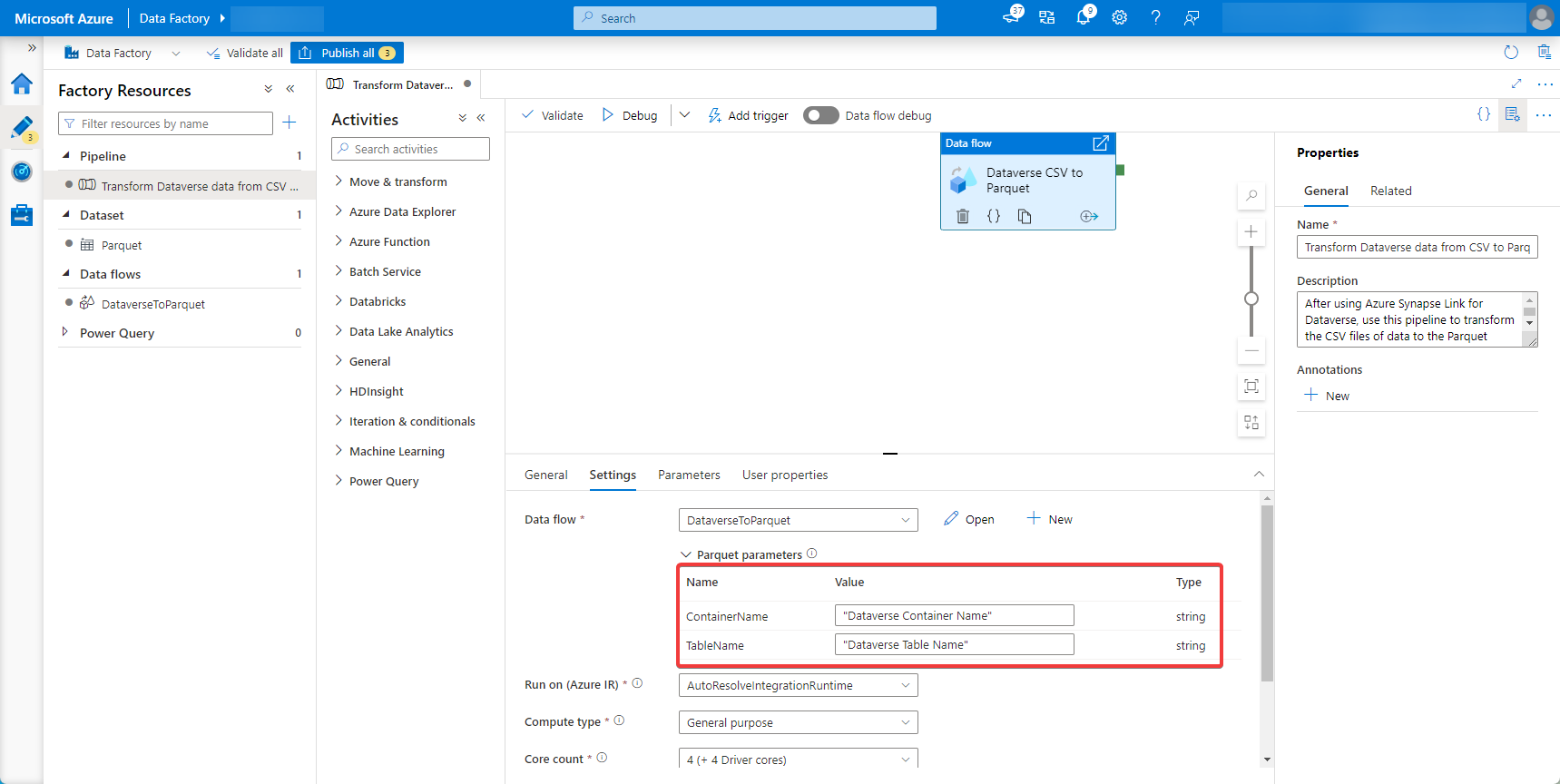

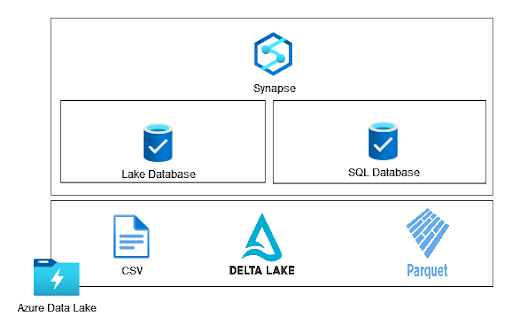

When using Azure Data Lake Store as a data source for Azure Analysis Services, is the Parquet file format supported? - Stack Overflow

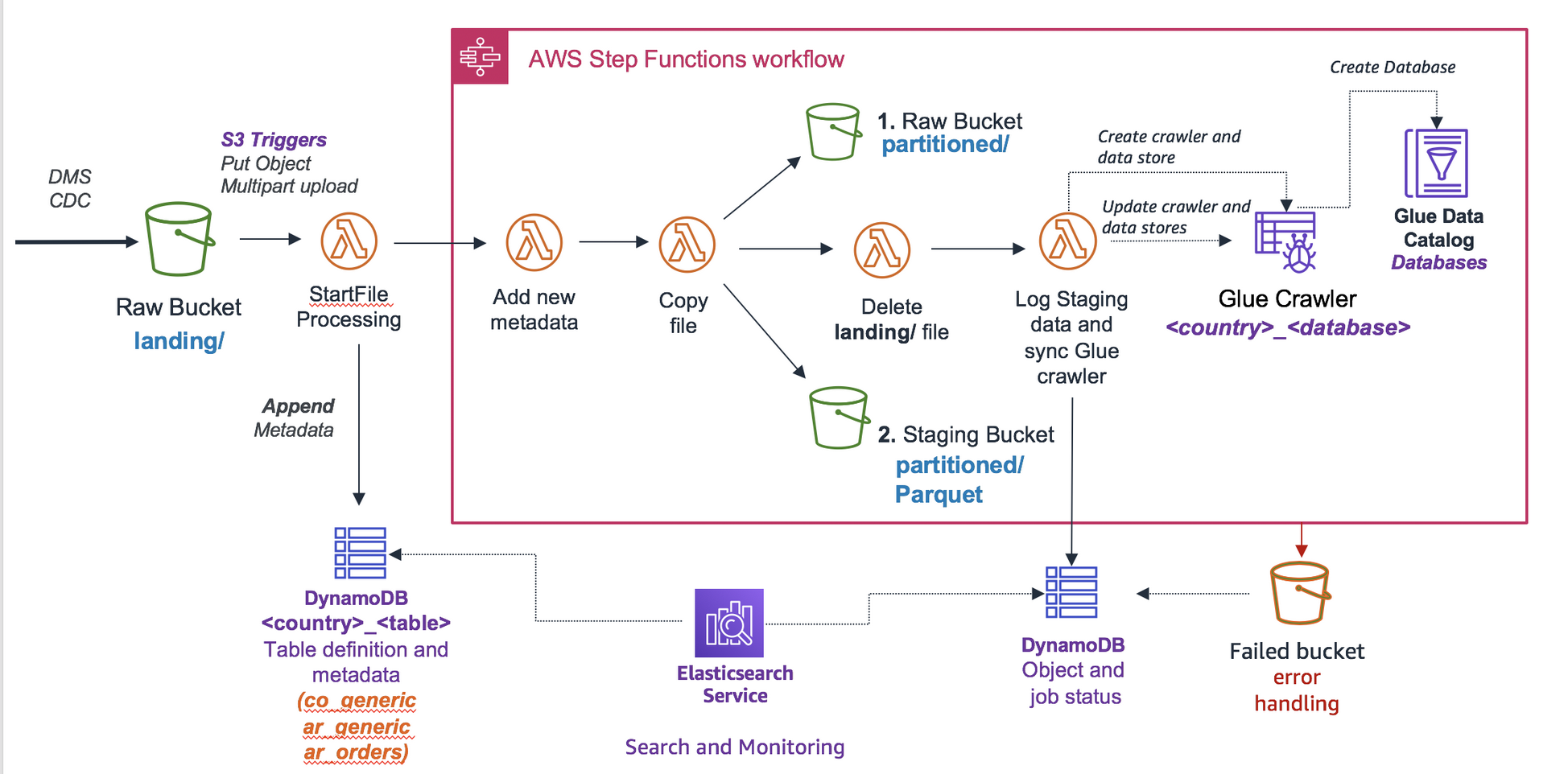

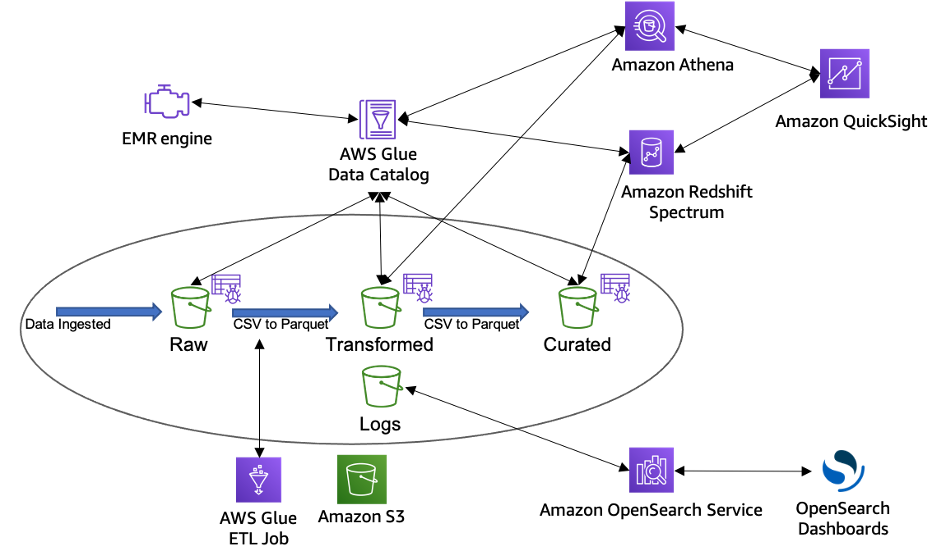

GitHub - andresmaopal/data-lake-staging-engine: S3 event-based engine to process files (microbatches), transform them (parquet) and sync the source to Glue Data Catalog - (Multicountry support)

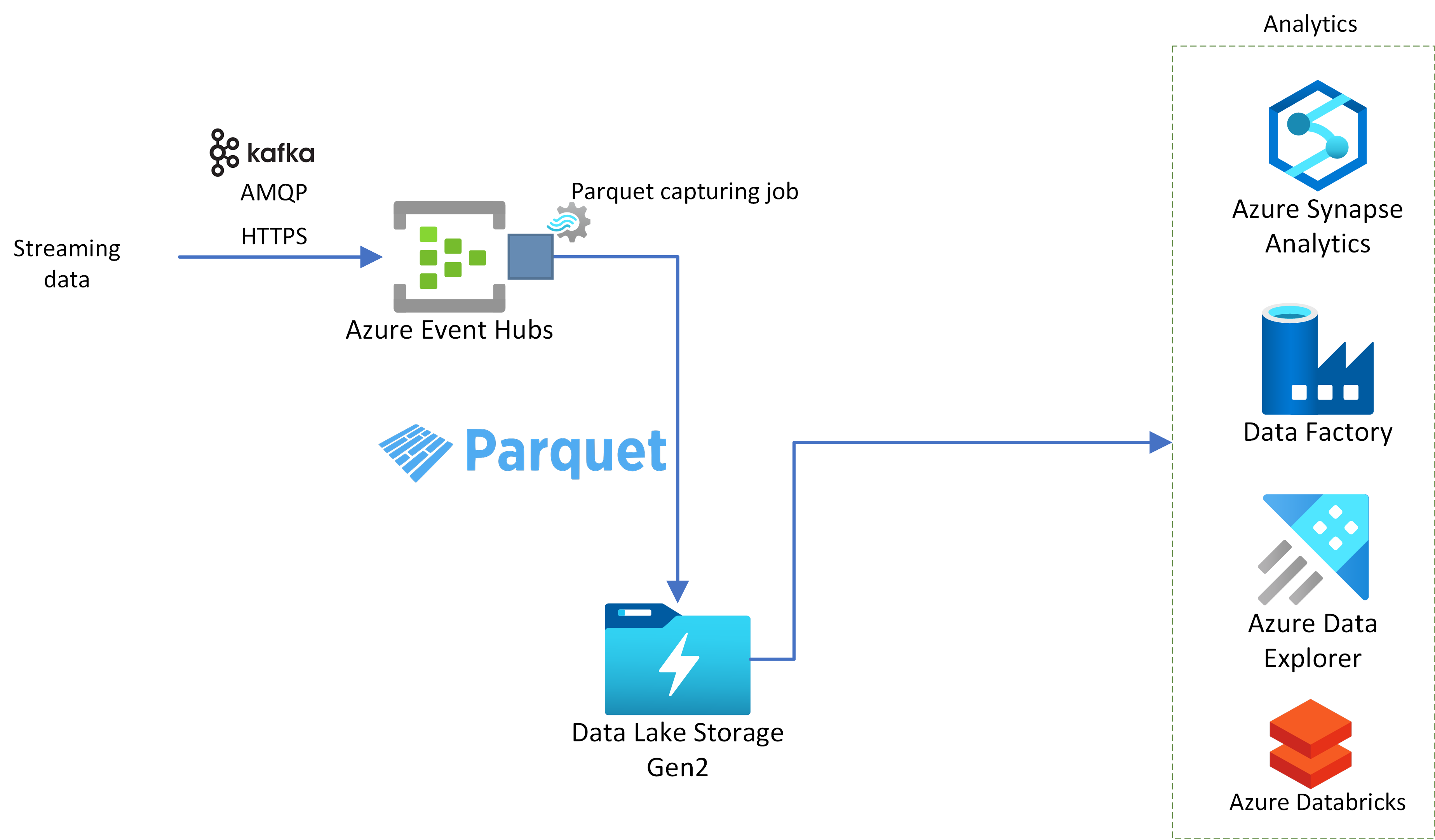

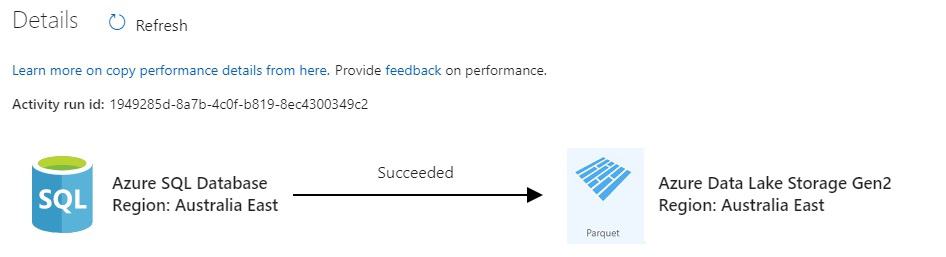

Azure Event Hubs - Capture event streams in Parquet format to data lakes and warehouses - Microsoft Community Hub

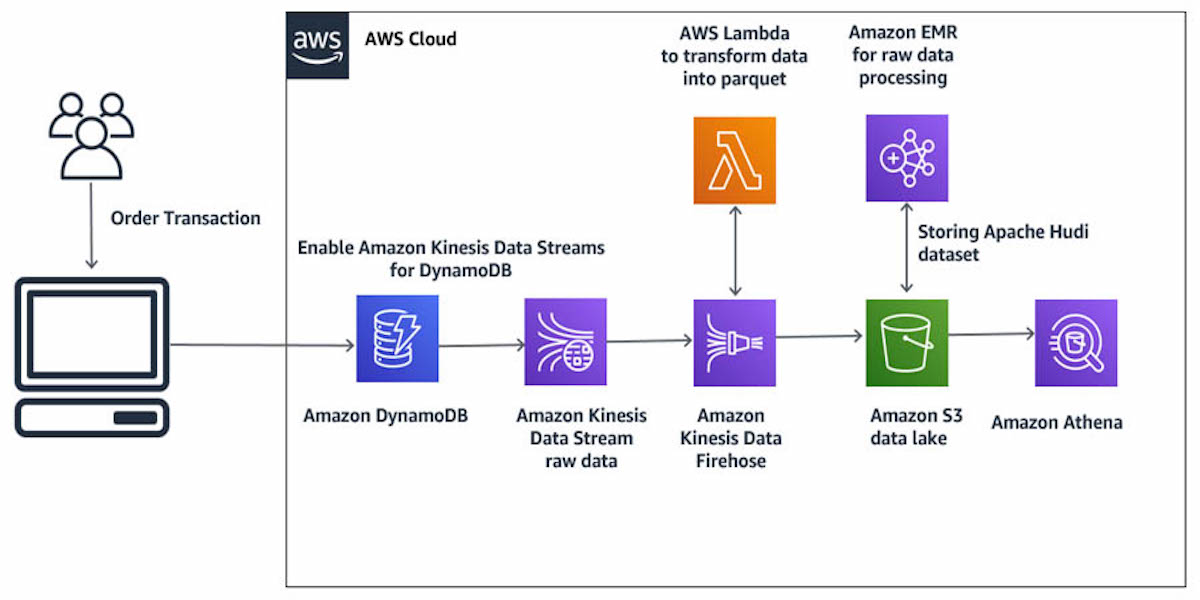

Hydrating a Data Lake using Query-based CDC with Apache Kafka Connect and Kubernetes on AWS | Programmatic Ponderings

![4. Setting the Foundation for Your Data Lake - Operationalizing the Data Lake [Book]](https://www.oreilly.com/api/v2/epubs/9781492049517/files/assets/opdl_0403.png)

![4. Setting the Foundation for Your Data Lake - Operationalizing the Data Lake [Book]](https://www.oreilly.com/api/v2/epubs/9781492049517/files/assets/opdl_0402.png)

![4. Setting the Foundation for Your Data Lake - Operationalizing the Data Lake [Book]](https://www.oreilly.com/api/v2/epubs/9781492049517/files/assets/opdl_0401.png)